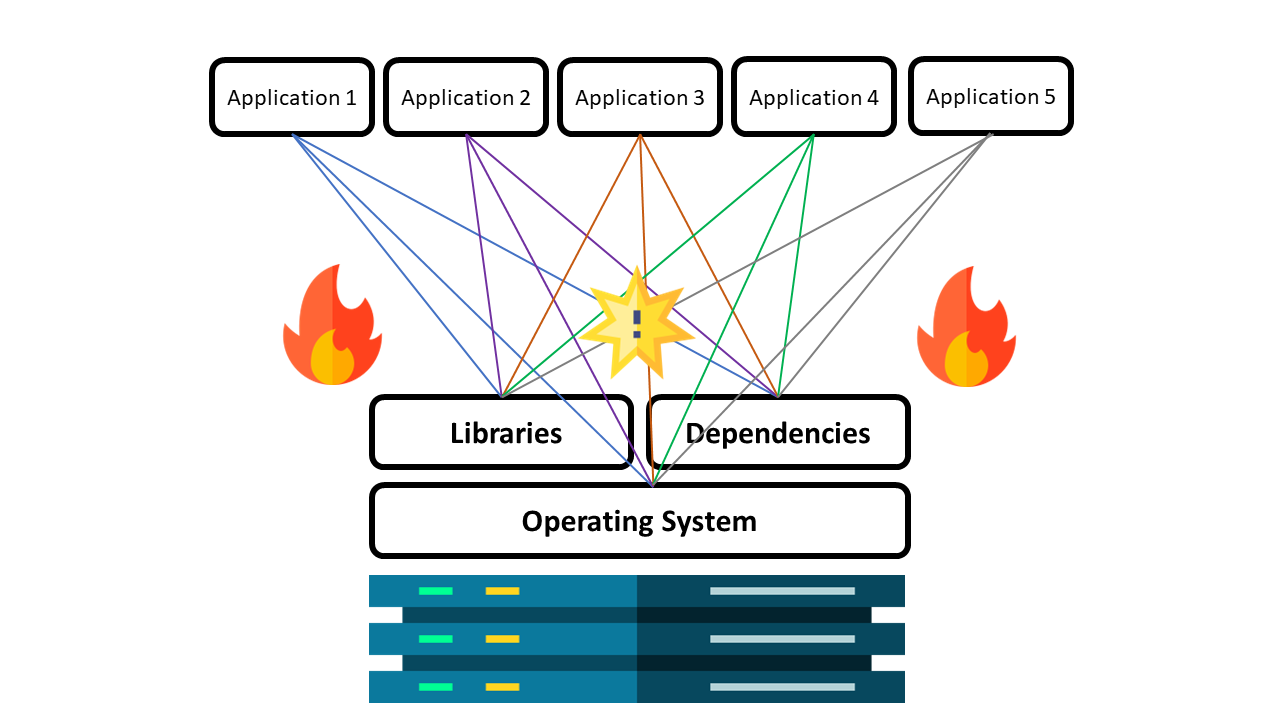

What is Dependency Matrix Hell?

Dependency matrix hell, often shortened to "dependency hell," occurs when applications that share a system require different, incompatible versions of the same library or framework. Because a shared location can usually hold only one version of a library, upgrading it for one application can break another. The result is version conflicts, compatibility issues, and system instability, which make software deployment and maintenance complex and time-consuming.
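To make the conflict concrete, here is a minimal sketch that searches for one library version acceptable to every application on a machine. The library name `libfoo`, the apps `app_a` and `app_b`, and the half-open version ranges are all hypothetical; real package managers use far richer constraint solvers.

```python
# Hypothetical model: each app declares the range of versions of a shared
# library ("libfoo") it accepts, as (minimum inclusive, maximum exclusive).
def satisfies(version, constraint):
    """Return True if version lies in the half-open range [low, high)."""
    low, high = constraint
    return low <= version < high


def find_shared_version(available, requirements):
    """Return the newest available version every app accepts, or None."""
    candidates = [
        v for v in available
        if all(satisfies(v, c) for c in requirements.values())
    ]
    return max(candidates) if candidates else None


available = [(1, 0), (1, 5), (2, 0), (2, 3)]  # versions as (major, minor)
requirements = {
    "app_a": ((1, 0), (2, 0)),  # app_a only works with libfoo 1.x
    "app_b": ((2, 0), (3, 0)),  # app_b only works with libfoo 2.x
}

print(find_shared_version(available, requirements))  # None
```

A `None` result is dependency hell in miniature: no single installed version keeps both applications working.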

What is a Container?

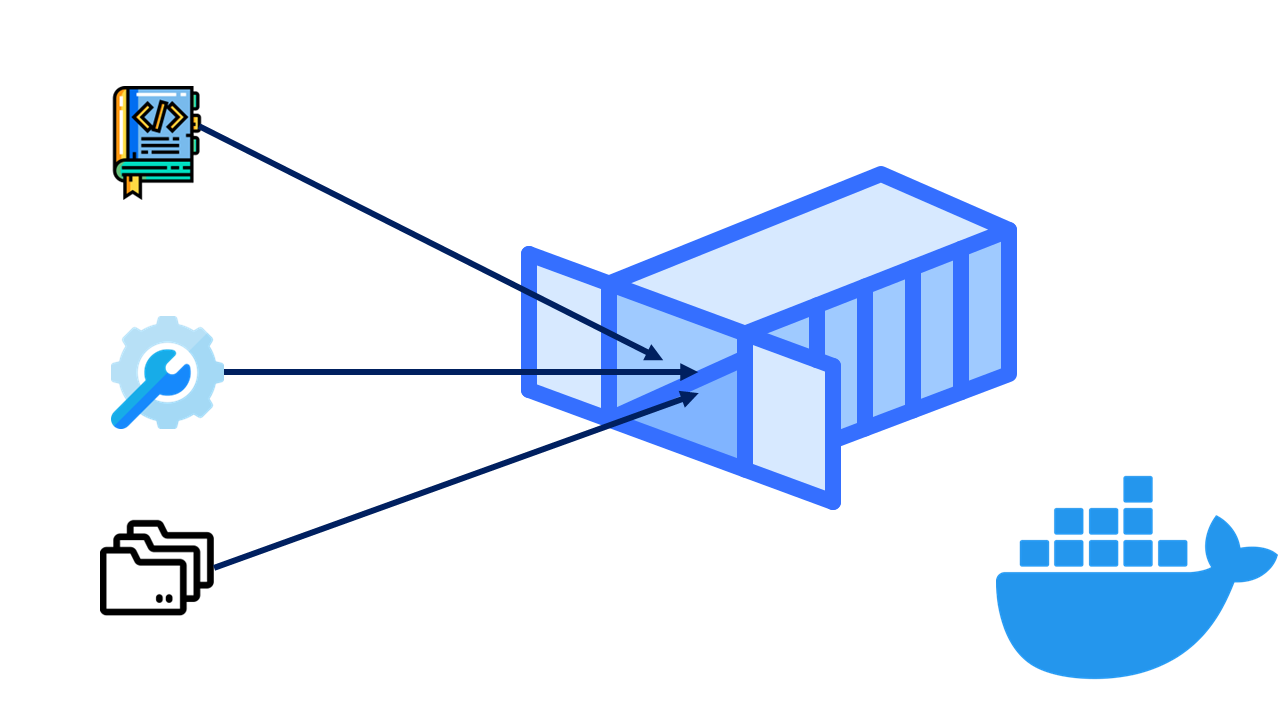

A container is a standard unit of software that packages up code and all its dependencies so that the application runs quickly and reliably from one computing environment to another. It encapsulates the software in a complete file system that contains everything needed to run: code, runtime, system tools, libraries, and settings. As a result, the application behaves consistently across computing environments, which mitigates the effects of dependency hell.
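The toy model below contrasts a single shared install location with containers that each bundle their own libraries. It is a hypothetical illustration, not how Docker stores files: `Container`, `libfoo`, and the version strings are invented names, and a plain dictionary stands in for each file system.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    """Toy model: a container bundles an app with its own private libraries."""
    app: str
    libs: dict = field(default_factory=dict)

    def lib_version(self, name):
        # Lookups only ever see this container's bundled libraries,
        # never the host's or another container's.
        return self.libs[name]


# Shared host install: one location, so the second install overwrites the first.
host_libs = {}
host_libs.update({"libfoo": "1.5"})  # installed for app_a
host_libs.update({"libfoo": "2.3"})  # installed for app_b; app_a now sees 2.3

# Containers: each app carries its own copy, so both versions coexist.
a = Container("app_a", {"libfoo": "1.5"})
b = Container("app_b", {"libfoo": "2.3"})

print(host_libs["libfoo"], a.lib_version("libfoo"), b.lib_version("libfoo"))
# 2.3 1.5 2.3
```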

What is Docker?

Docker is an open-source platform that automates building, deploying, and running applications by using containerization. It lets developers package an application with all of its dependencies into a standardized unit. Much as shipping containers did for logistics, this simplifies software delivery by decoupling the application from the system it runs on.

The Origins and Etymology of 'Container'

The term 'container' in software development was popularized by Docker, released in 2013, but the concept is much older. In Unix-like operating systems, the chroot system call, introduced in the late 1970s, was an early precursor: it changes the apparent root directory of a process and its children, isolating their view of the file system. The term itself is a metaphor borrowed from the shipping industry, where standardized containers make it simple and efficient to transport many kinds of goods.

Why Do We Containerize?

Docker is widely used for several compelling reasons, and its benefits fall into three key areas:

1. Container Isolation

Docker isolates each container, encapsulating an application and its dependencies in a self-contained unit. This isolation prevents conflicts between applications and keeps their behavior consistent across environments, which makes software deployments easier and more efficient to manage.

2. Simplified Environment Management

One of the primary challenges in software development is dealing with inconsistent and complex environments. Docker tackles this issue by packaging applications and their dependencies into containers, so developers can avoid setting up and maintaining complex development environments by hand. This streamlined approach enables smoother collaboration, largely eliminates the notorious "it works on my machine" problem, and gives every team member the same environment.

3. Accelerated Development and Deployment

Docker empowers teams to develop, test, build, and deploy software faster. With containers, developers can quickly spin up isolated environments for testing, reproduce production-like setups with ease, and deploy applications consistently across different platforms. This streamlined workflow results in shorter development cycles, faster feedback loops, and prompt delivery of value to end-users.

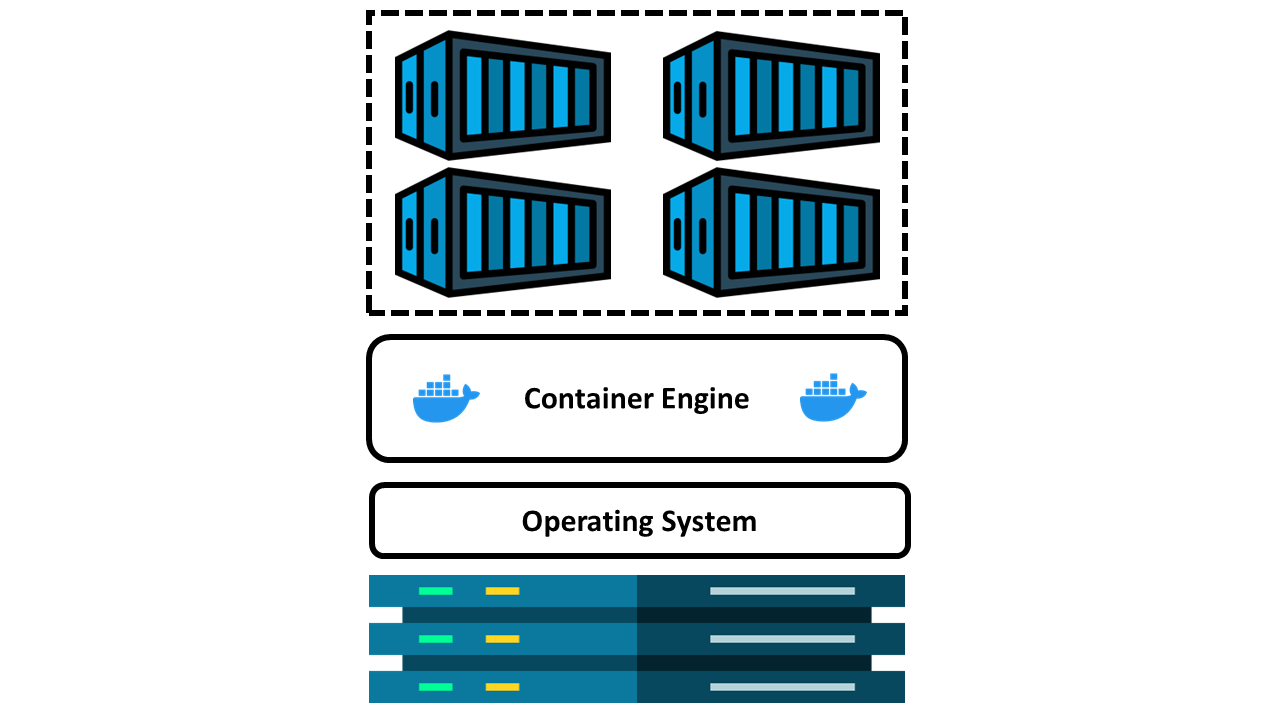

How Does Docker Work on a Computer?

Docker works on a computer by interacting with the underlying hardware and the operating system to create and manage containers.

At the hardware level, Docker uses the host machine's physical resources, such as CPU, memory, and I/O devices. These resources are shared among the containers, which makes Docker lightweight and efficient compared with virtual machines (VMs), each of which runs its own guest operating system and needs its own allocation of resources.

The operating system plays a crucial role in Docker's functioning, specifically its core component, the kernel, which manages hardware resources and provides services to other software. Docker relies on two Linux kernel features that together provide the foundation for containerization: control groups (cgroups), which limit and account for the CPU, memory, and I/O each container can use, and namespaces, which isolate what each container can see, such as process IDs, network interfaces, mount points, and hostnames.
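One thing cgroups control is how CPU time is divided when containers compete for it. Docker's `--cpu-shares` flag sets a relative weight (default 1024), and under full contention each group receives CPU time roughly in proportion to its weight. The sketch below computes that proportional split; the container names are hypothetical, and it deliberately ignores the kernel redistributing time left unused by idle groups.

```python
def cpu_split(weights, total_cpus):
    """Divide CPU capacity in proportion to each group's relative weight.

    Models only the fully contended case, where every group wants as much
    CPU as it can get. When some groups are idle, the kernel hands their
    unused time to the busy ones, which this sketch does not model.
    """
    total_weight = sum(weights.values())
    return {name: total_cpus * w / total_weight for name, w in weights.items()}


# Hypothetical containers: "web" keeps the default weight, "batch" gets half.
print(cpu_split({"web": 1024, "batch": 512}, total_cpus=3))
# {'web': 2.0, 'batch': 1.0}
```

Because the weights are relative, doubling every weight leaves the split unchanged; only their ratios matter.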

The Docker engine is the application installed on the host machine that enables Docker functionality. It's responsible for building and running Docker containers, using Docker images as the blueprint. The Docker engine communicates with the kernel to leverage its features for containerization, and it manages aspects such as networking between containers, attaching storage, and monitoring the status of containers.
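A Docker image is built from stacked read-only layers, and each container started from it gets its own thin writable layer on top: reads find the topmost layer that contains a file, and writes go only to the container's layer. The sketch below models that lookup order with Python's `collections.ChainMap`. The layer contents are hypothetical, and real storage drivers (such as overlay2) also handle deletions through whiteout files, which this sketch does not model.

```python
from collections import ChainMap

# Hypothetical image layers, listed top-most first; each maps path -> contents.
app_layer = {"/app/main.py": "print('hi')"}
runtime_layer = {"/usr/bin/python3": "<binary>", "/etc/os-release": "runtime"}
base_layer = {"/etc/os-release": "base", "/bin/sh": "<binary>"}


def start_container(*image_layers):
    """Stack a fresh, empty writable layer on top of the read-only image layers."""
    # ChainMap searches its maps in order and sends writes to the first one.
    return ChainMap({}, *image_layers)


c1 = start_container(app_layer, runtime_layer, base_layer)
c2 = start_container(app_layer, runtime_layer, base_layer)

print(c1["/etc/os-release"])  # runtime (the upper layer shadows the base layer)
c1["/tmp/log.txt"] = "written by c1"  # lands only in c1's writable layer
print("/tmp/log.txt" in c2)  # False (the shared image layers are untouched)
```

Because the image layers are never modified, any number of containers can share them, which is part of why starting a container is cheap.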

Containers are the end products managed by Docker. Each container is an isolated unit of software that contains an application and all its dependencies. Processes inside a container run directly on the host kernel, with no emulation layer in between; the Docker engine sets up the namespaces, cgroups, and file system that give the application an environment that looks like a separate machine.

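By combining the hardware, operating system, Docker engine, and containers, Docker lets developers package applications into self-sufficient units that run on any host where Docker is installed, provided the host offers a compatible kernel and CPU architecture. For example, Linux containers need a Linux kernel, so Docker Desktop on macOS and Windows runs them inside a lightweight Linux VM, and an image built only for x86-64 will not run natively on an ARM machine.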

The Role of a Container Engine

A container engine is a software application that manages the lifecycle of containers: it obtains images, creates and starts containers from them, and stops and removes them. It provides an environment where developers can build, test, and run containers, while orchestration across many machines is usually left to tools such as Kubernetes. Examples include Docker, containerd, and CRI-O. Container engines are critical for creating portable applications and ensuring they run consistently across different computing environments.
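The lifecycle a container engine manages can be sketched as a small state machine. The state names `created`, `running`, `paused`, and `exited` match states Docker reports, and the commands mirror `docker` CLI verbs, but the transition table is a simplified, hypothetical illustration rather than Docker's implementation; `removed` stands in for the container no longer existing.

```python
# Simplified, hypothetical transition table: (current state, command) -> next state.
TRANSITIONS = {
    ("created", "start"): "running",
    ("running", "pause"): "paused",
    ("paused", "unpause"): "running",
    ("running", "stop"): "exited",
    ("exited", "start"): "running",
    ("created", "rm"): "removed",
    ("exited", "rm"): "removed",
}


class ContainerLifecycle:
    def __init__(self):
        self.state = "created"  # the state right after a container is created

    def apply(self, command):
        """Apply a command, rejecting transitions the table does not allow."""
        key = (self.state, command)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot {command!r} a container that is {self.state!r}")
        self.state = TRANSITIONS[key]
        return self.state


c = ContainerLifecycle()
for cmd in ["start", "pause", "unpause", "stop", "rm"]:
    print(cmd, "->", c.apply(cmd))
```

Rejecting `rm` on a running container in this model echoes the real CLI, where removing a running container requires stopping it first or forcing it with `docker rm -f`.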

The Benefits of Containerization

Containerization brings several benefits:

- Portability: Containers encapsulate everything an application needs to run, ensuring it will operate consistently across different environments.

- Scalability: Containers can be created, duplicated, or stopped at a rapid pace, which is ideal for scaling applications.

- Efficiency: Containers share the host system's OS kernel, so each one does not need its own full copy of an operating system.

- Isolation: Each container runs in isolation, ensuring that application processes don't interfere with each other.

Containerization Use Cases

Some key use cases of containerization include:

- Microservices architectures: Containers can host individual microservices, improving scalability and fault isolation.

- CI/CD pipelines: Containers provide consistent and repeatable environments, ideal for build and test processes.

- Hybrid cloud deployments: The portability of containers allows seamless deployment across different cloud providers.

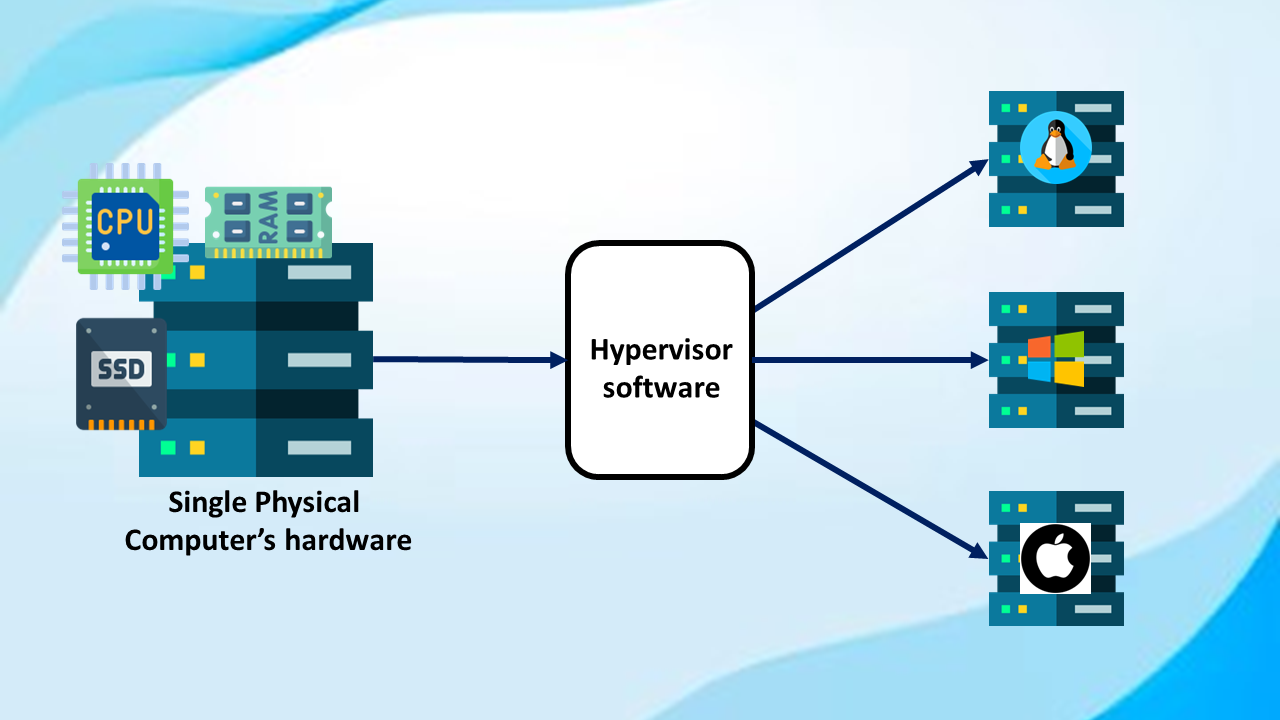

Why Is Containerization Not Enough on Its Own, and Why Do We Still Use VMs?

Although containerization has many benefits, it is not a silver bullet. All containers on a host share that host's kernel, so an attacker who exploits a kernel vulnerability from inside one container may compromise the host and every other container on it. In addition, some applications are unsuitable for containers because of their system requirements, such as needing a different operating system kernel from the host's or custom kernel modules. Virtual machines (VMs) address these concerns: each VM runs its own kernel, which provides stronger isolation and allows different operating systems to run side by side, making VMs a necessary part of many IT infrastructures.

What Happens if the OS on Which Docker Containers are Running Crashes?

If the host operating system crashes, all running containers on that host would also fail because containers share the host OS's kernel. However, orchestration platforms like Kubernetes can help manage this risk by restarting containers on other available nodes.
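The recovery idea can be sketched as moving every container from a failed node onto the healthy ones. This is a hypothetical greedy "least-loaded node" rule with made-up node and container names; it is not how the Kubernetes scheduler actually scores nodes, which also weighs resource requests, affinity rules, and more.

```python
def reschedule(placements, failed_node):
    """Move every container from a failed node onto the least-loaded healthy node.

    placements maps node name -> list of container names. Returns a new dict
    without the failed node and leaves the input unchanged. Greedy
    least-loaded placement is a stand-in for a real scheduler's scoring.
    """
    healthy = {node: list(cs) for node, cs in placements.items() if node != failed_node}
    if not healthy:
        raise RuntimeError("no healthy nodes left to run the containers")
    for container in placements.get(failed_node, []):
        # Ties go to the first node in dictionary order.
        target = min(healthy, key=lambda node: len(healthy[node]))
        healthy[target].append(container)
    return healthy


cluster = {"node-1": ["web-1", "web-2"], "node-2": ["db-1"], "node-3": []}
print(reschedule(cluster, "node-1"))
# {'node-2': ['db-1', 'web-2'], 'node-3': ['web-1']}
```

Note that rescheduling only restores capacity: whatever state lived solely inside the crashed containers is lost unless it was stored on external volumes or services.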

Key Difference Between VMs and Containerization

The key difference lies in their architectural approach. A VM is an emulation of a real computer that has its own operating system and resources, while a container shares the host system's OS kernel and uses its resources more efficiently. VMs provide strong isolation but at the cost of greater resource usage, whereas containers are lightweight but may raise security concerns because of the shared kernel.

How Can VMs and Containers Work Together?

VMs and containers can complement each other in what is often called a 'hybrid' approach. VMs can provide a secure and isolated environment to host a group of containers. This combination can offer the best of both worlds: the resource efficiency of containers and the strong isolation of VMs.
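One way to see why grouping containers into VMs helps is to compute which containers share a kernel with a compromised one. The sketch makes the pessimistic, hypothetical assumption that a kernel exploit from one container reaches everything on that kernel; the layouts, VM names, and container names are invented for illustration.

```python
def sharing_kernel_with(layout, container):
    """Return the set of containers that share a kernel with `container`.

    layout maps a kernel boundary (a VM, or a bare-metal host) to the
    containers it runs. Under the pessimistic assumption that a kernel
    exploit reaches every container on that kernel, this is the blast radius.
    """
    for containers in layout.values():
        if container in containers:
            return set(containers)
    raise KeyError(container)


# Hypothetical layouts: everything on one host vs. one VM per team.
bare_metal = {"host": ["web", "api", "billing", "reports"]}
vm_per_team = {"vm-frontend": ["web", "api"], "vm-finance": ["billing", "reports"]}

print(sorted(sharing_kernel_with(bare_metal, "web")))   # ['api', 'billing', 'reports', 'web']
print(sorted(sharing_kernel_with(vm_per_team, "web")))  # ['api', 'web']
```

The design choice is where to draw the kernel boundaries: grouping containers that trust each other into the same VM keeps most of the density benefit while containing a breach to one group.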

Conclusions

Containerization, embodied by technologies like Docker, has revolutionized software development and deployment by addressing dependency hell and offering an efficient, scalable, and portable solution. However, it is not a complete solution on its own. VMs remain critical for their strong isolation and their ability to host diverse operating systems. The choice between VMs and containers, or the decision to use both, depends on the specific needs of an application or system. As with many things in technology, it's about using the right tool for the right job.